How Cities See

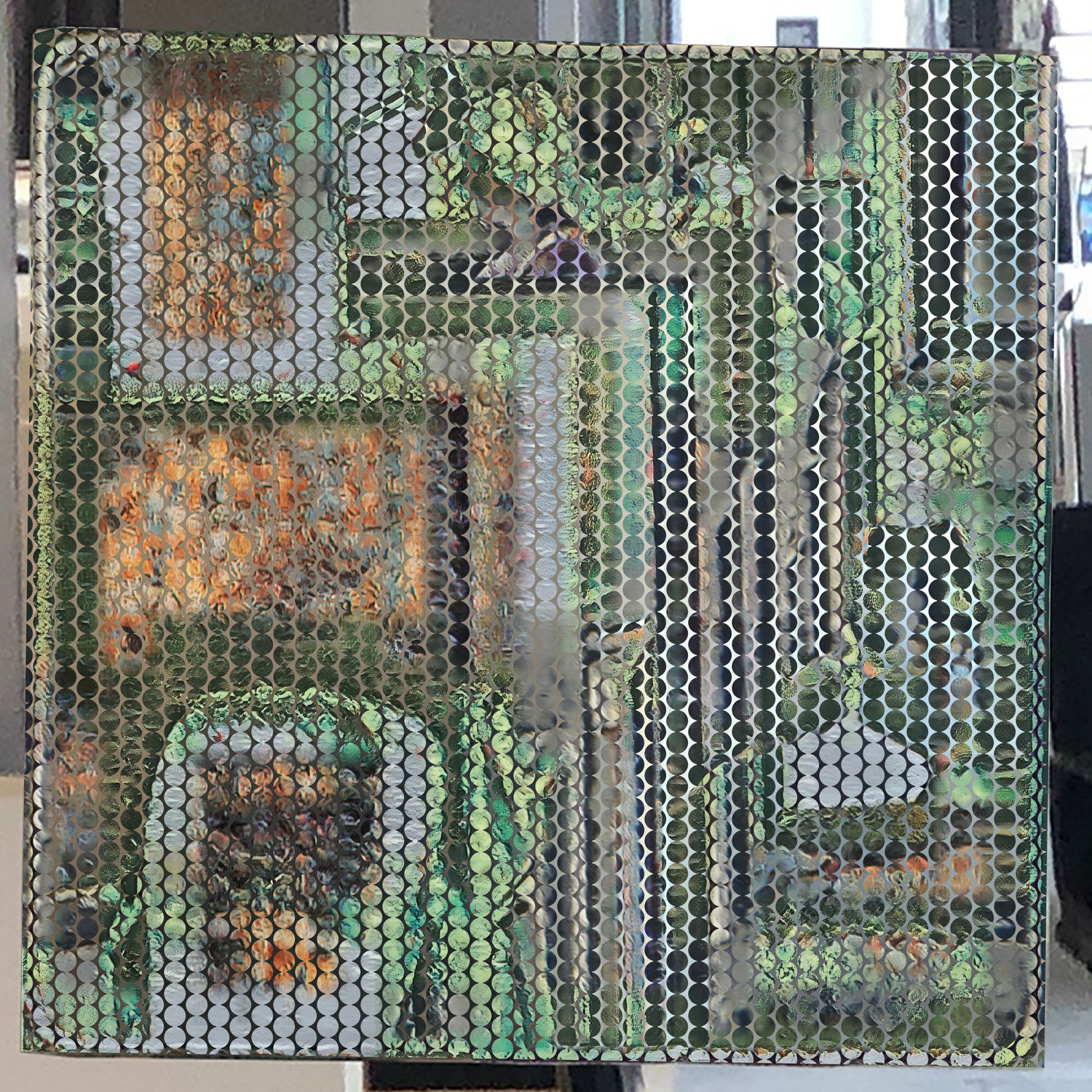

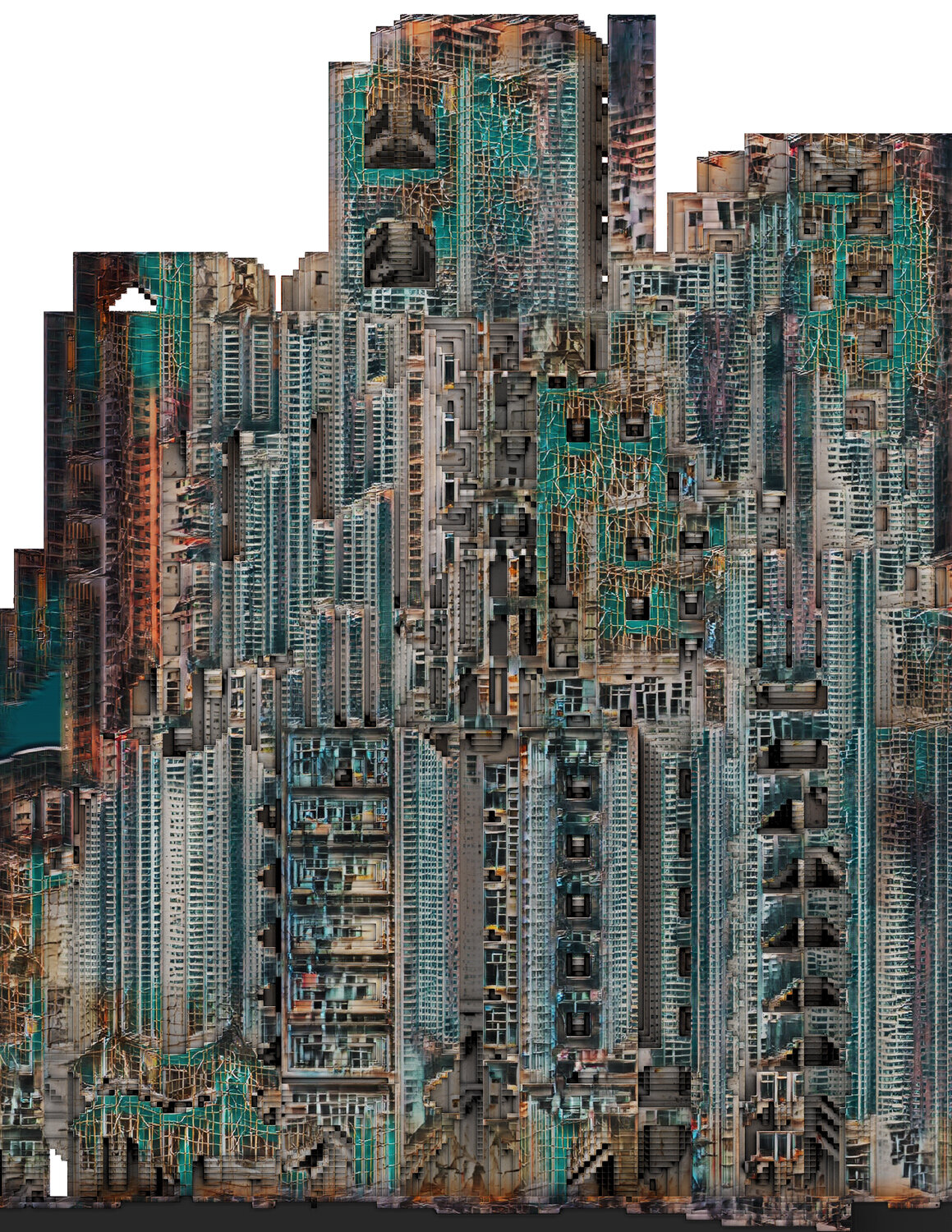

How Cities See uses generative machine learning algorithms to examine three questions: how existing urban conditions will be augmented and transformed by embedded machine sensing and artificial intelligence; how non-human points of view within these contexts will engage in a dynamic evolutionary process; and how such dynamics can be made visible and useful to urban designers and dwellers alike.

This project inverts the usual relationship between the built environment and its occupants. It uses machine learning to generate new architectural and urban designs that better suit a new class of non-human occupants. For example, the project explores how a city street, its buildings, and the surrounding street furniture could be redesigned to make it easier for a self-driving car to navigate.

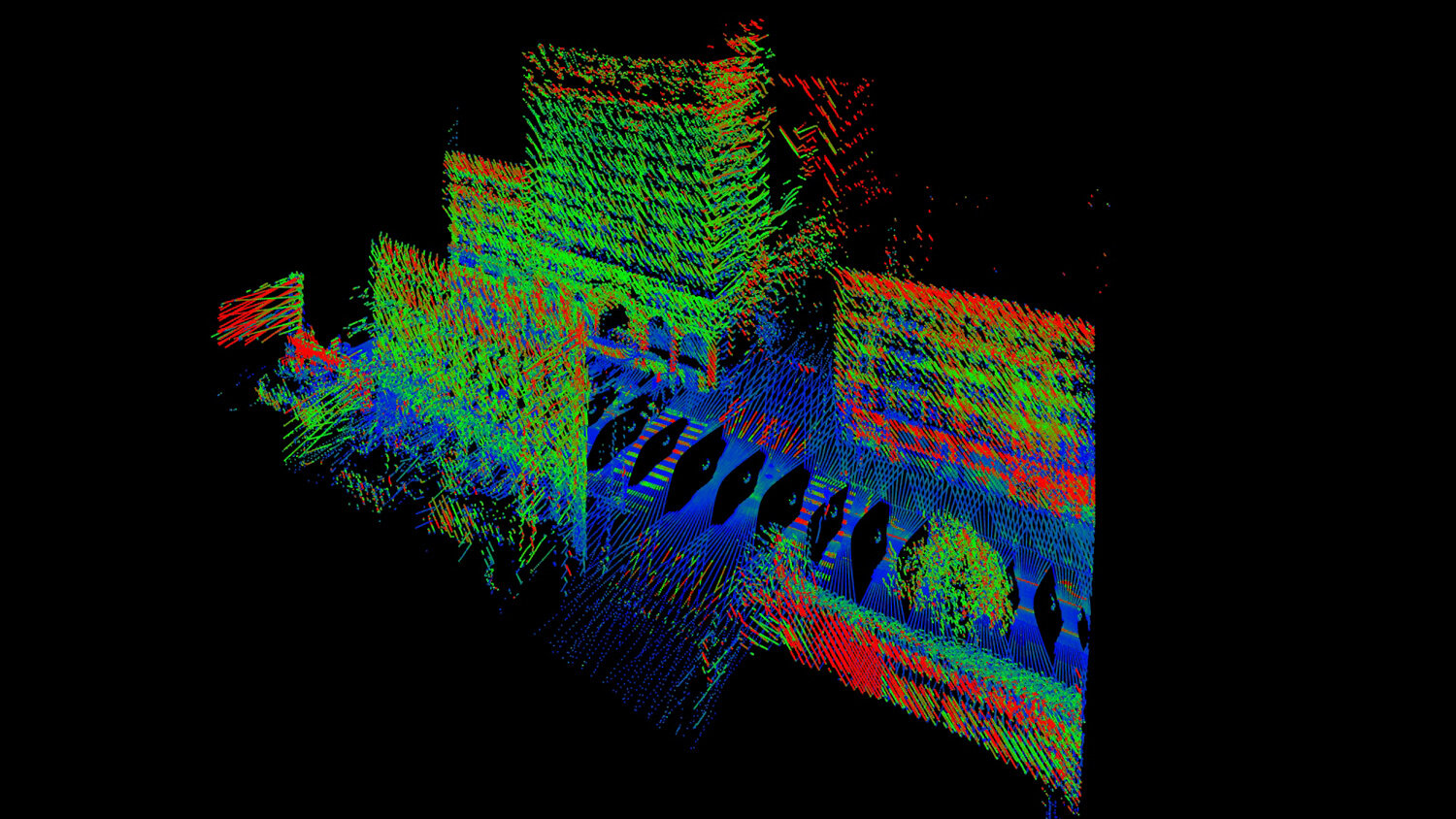

We are developing speculative design scenarios that draw on datasets (LIDAR and photogrammetry scans of Downtown Los Angeles) and place them in plausible narrative contexts. These scenarios illuminate the conflicts, contradictions, opportunities, and innovations that ambient urban AI will bring.

Funded by: Google AMI